A story around replication lag and Flow-Control.

Overview

In the last few months we had 2 main actors in the MySQL ecosystem: ProxySQL and Group Replication (with its evolution into InnoDB Cluster).

While I have covered the first extensively, my last serious work on GR goes back to some lab version from past years.

Given Oracle's decision to declare it GA, and Percona's decision to provide some level of support for GR, I decided it was time for me to take a look at it again.

A lot of reviews have already been done covering different topics. I saw articles about GR and performance, GR and basic functionality (or the lack of it, like automatic node provisioning), GR and ProxySQL, and so on.

But one question kept coming up in my mind. If GR and InnoDB Cluster have to work as an alternative to other (virtually) synchronous replication mechanisms, what changes or shifts must our customers consider if they want to move from one to the other?

In solutions using Galera, like Percona XtraDB Cluster (PXC), there is one main concept to which all of us must refer. The cluster is data-centric, which in the end brings us to what matters: the data and its state, which must be exactly the same on each node at a given time (commit/apply). To guarantee this, PXC and others use a set of data validations and Flow-Control that in the end keep the cluster dataset consistent on each node, respecting the main principle (be data-centric).

An immediate application of this principle is that an application can query ANY node in the PXC and be sure to get the same data, or write and know that the data will be visible on all nodes (virtually) at the same time.

Last but not least, if a node is not consistent with the others, it will be excluded and must be rebuilt, or have its inconsistency fixed, before joining back.

Not a minor thing if you think carefully, and a very useful thing to have, because it allows you to transparently split write/read operations, or fail over from one node to another with less trouble, and more.

When I thought about GR (or InnoDB Cluster), I put myself in the customer's shoes, and I asked my other self: “Aside from all the other things we know (see above), what is the real impact of moving from PXC to GR/InnoDB Cluster for my application? Because when you mention to me that GR is still (basically) using replication with binlogs and relay logs, even if there is a Flow-Control mechanism, an alarm bell starts to ring in my mind.”

My answer was: “Let us do a POC, and see what is really going on in those terms.”


The POC

Given that, I set up a simple set of servers using GR, with a very basic application performing writes on a single Writer node, and (eventually) reads on the other nodes. The schema definition can be found here; mainly I used the 4 tables from my windmills test suite, nothing special, weird, or specifically designed for GR. Actually, I have used this test a lot for PXC in the past, so it was a perfect fit.

Test definition

The application will do very simple work, and I planned to test 4 main cases:

- One thread performing one insert at each transaction.

- One thread performing 50 batched inserts at each transaction.

- 8 threads performing one insert at each transaction.

- 8 threads performing 50 batched inserts at each transaction.

As you can see, nothing crazy, just quite simple operations.

Then I decided to test it using the following 4 conditions on the servers:

- Single slave worker, FC as default

- Single slave worker, FC set to 25

- 8 slave workers, FC as default

- 8 slave workers, FC set to 25
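The 4 load cases and the 4 server conditions above combine into 16 runs. A minimal Python sketch of that matrix follows. The post does not say which variables were changed, so the mapping is my assumption: `slave_parallel_workers` for the worker count, and both `group_replication_flow_control_applier_threshold` and `group_replication_flow_control_certifier_threshold` for "FC set to 25". The helper names are hypothetical.

```python
from itertools import product

# Load cases from the list above: (application threads, inserts per transaction).
LOAD_CASES = [(1, 1), (1, 50), (8, 1), (8, 50)]

# Server conditions: (slave workers, flow-control threshold).
# None means "leave the default" (25000 entries in MySQL 5.7).
SERVER_CONDITIONS = [(1, None), (1, 25), (8, None), (8, 25)]


def server_settings(workers, fc_threshold):
    """Build the SET statements for one server condition.

    Assumption: the variable names below are my guess at how the
    conditions were applied; the original post does not list them.
    """
    stmts = [f"SET GLOBAL slave_parallel_workers = {workers};"]
    if fc_threshold is not None:
        for name in (
            "group_replication_flow_control_applier_threshold",
            "group_replication_flow_control_certifier_threshold",
        ):
            stmts.append(f"SET GLOBAL {name} = {fc_threshold};")
    return stmts


def test_matrix():
    """Return every combination of server condition and load case."""
    return [
        {"workers": w, "fc": fc, "threads": t, "batch": b}
        for (w, fc), (t, b) in product(SERVER_CONDITIONS, LOAD_CASES)
    ]


if __name__ == "__main__":
    for run in test_matrix():
        print(run)
```

Whether "single slave worker" maps to a value of 0 or 1 is left open here; the sketch simply passes the number through. A change to the worker count is normally picked up only when the applier is restarted.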

Again, nothing weird or strange from my point of view. I used 4 nodes:

- Gr1 Writer

- Gr2 Reader

- Gr3 Reader with minimal latency (~10ms)

- Gr4 Reader with minimal latency (~10ms)

Finally, I had to be sure to measure the lag in a way that allows me to reference it consistently on all nodes.

I think we can safely say that the incoming GTID (Received_transaction_set from replication_connection_status) is for sure the last apply on the master that a slave node can know about.

This is because network delay can prevent the very latest one from really being "received".

The other point of reference is GTID_EXECUTED, which refers to the latest GTID processed on the node itself.

The closest query that can track the distance will be:

    -- Last sequence number executed on this node
    select @last_exec:=SUBSTRING_INDEX(SUBSTRING_INDEX(SUBSTRING_INDEX( @@global.GTID_EXECUTED,':',-2),':',1),'-',-1) last_executed;

    -- Last sequence number received on the GR applier channel
    select @last_rec:=SUBSTRING_INDEX(SUBSTRING_INDEX(SUBSTRING_INDEX( Received_transaction_set,':',-2),':',1),'-',-1) last_received
    FROM performance_schema.replication_connection_status WHERE Channel_name = 'group_replication_applier';

    -- Distance between received and executed
    select (@last_rec - @last_exec) as real_lag;

Or, in the case of a single worker:

    -- Last sequence number executed on this node
    select @last_exec:=SUBSTRING_INDEX(SUBSTRING_INDEX( @@global.GTID_EXECUTED,':',-1),'-',-1) last_executed;

    -- Last sequence number received on the GR applier channel
    select @last_rec:=SUBSTRING_INDEX(SUBSTRING_INDEX(Received_transaction_set,':',-1),'-',-1) last_received
    FROM performance_schema.replication_connection_status WHERE Channel_name = 'group_replication_applier';

    -- Distance between received and executed
    select (@last_rec - @last_exec) as real_lag;

The result will be something like this:

    +---------------+
    | last_executed |
    +---------------+
    | 23607         |
    +---------------+
    +---------------+
    | last_received |
    +---------------+
    | 23607         |
    +---------------+
    +----------+
    | real_lag |
    +----------+
    |        0 |
    +----------+
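The queries above compare only the last sequence number of each set. As a complement, here is a minimal Python sketch that counts every transaction present in the received set but missing from the executed set, which also copes with gaps and multiple server UUIDs. It assumes the two strings have already been fetched from `Received_transaction_set` and `@@global.GTID_EXECUTED`; the function names are hypothetical and this is not the method used in the POC.

```python
def parse_gtid_set(text):
    """Parse 'uuid:1-5:7,uuid2:1-3' into {uuid: [(start, end), ...]}."""
    result = {}
    for part in text.replace("\n", "").split(","):
        part = part.strip()
        if not part:
            continue
        uuid, *ranges = part.split(":")
        intervals = result.setdefault(uuid.lower(), [])
        for item in ranges:
            start, _, end = item.partition("-")
            # A single number such as '7' is the interval 7-7.
            intervals.append((int(start), int(end or start)))
    return result


def pending_transactions(received, executed):
    """Count transactions in `received` that are not in `executed`.

    Assumes the intervals inside each set do not overlap, which is how
    the server prints GTID sets.
    """
    rec = parse_gtid_set(received)
    exe = parse_gtid_set(executed)
    pending = 0
    for uuid, intervals in rec.items():
        done = exe.get(uuid, [])
        for start, end in intervals:
            covered = sum(
                max(0, min(end, d_end) - max(start, d_start) + 1)
                for d_start, d_end in done
            )
            pending += (end - start + 1) - covered
    return pending


if __name__ == "__main__":
    # 'aaaa' stands in for a real server UUID.
    print(pending_transactions("aaaa:1-23607", "aaaa:1-23607"))
```

On the server side, the built-in `GTID_SUBTRACT()` function returns the same difference as a GTID set, which may be a simpler starting point if you only need to see what is still pending.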

The whole set of tests can be found here, with all the commands you may need to run the application (you can find it here), and replicate the tests.

I will focus on the results, or this blogpost will be far too long, but I invite you to see the details.

The results

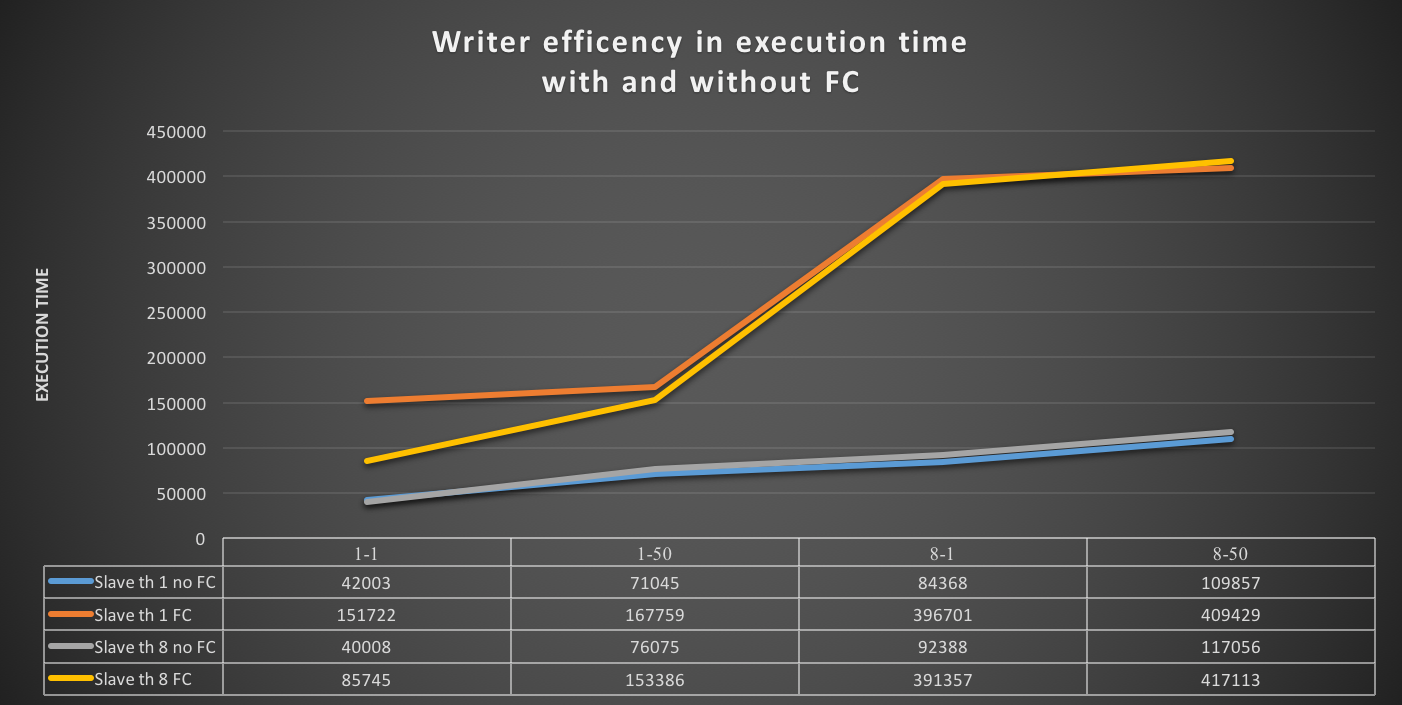

Efficiency on Writer by execution time & Rows/sec

Here, using the raw data from the tests (Excel spreadsheet available here), I was interested in identifying if and how the Writer is affected by the use of GR and FC.

Reviewing the graph, we can see that the Writer has a linear increase in execution time (when using the default FC) as the load increases. Nothing really concerning, and all in all expected even if the load is light; we will see later that the volume of rows at the end justifies the execution time.

The scenario is different if we use FC: the execution time increases significantly in both cases (single worker/multiple workers). In the worst case (8 threads, 50-insert batches) it becomes 4 times higher than for the same load without FC.

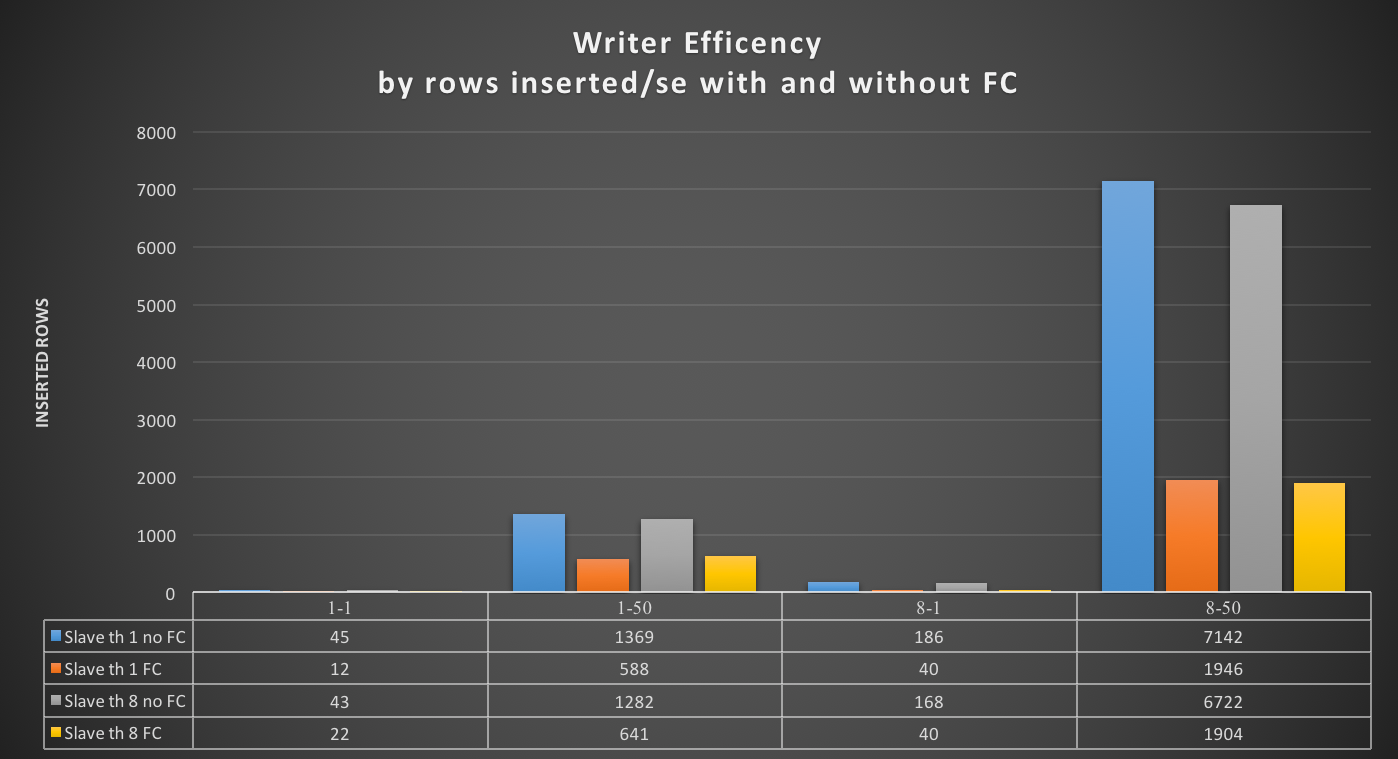

What happens to the inserted rows? In the application I trace the rows inserted/sec, so it is easy to see what is going on there as well.

We can see that the Writer with FC activated is able to insert less than 1/3 of the rows it can process without FC. We can definitely say that FC has a significant impact on the Writer's performance.

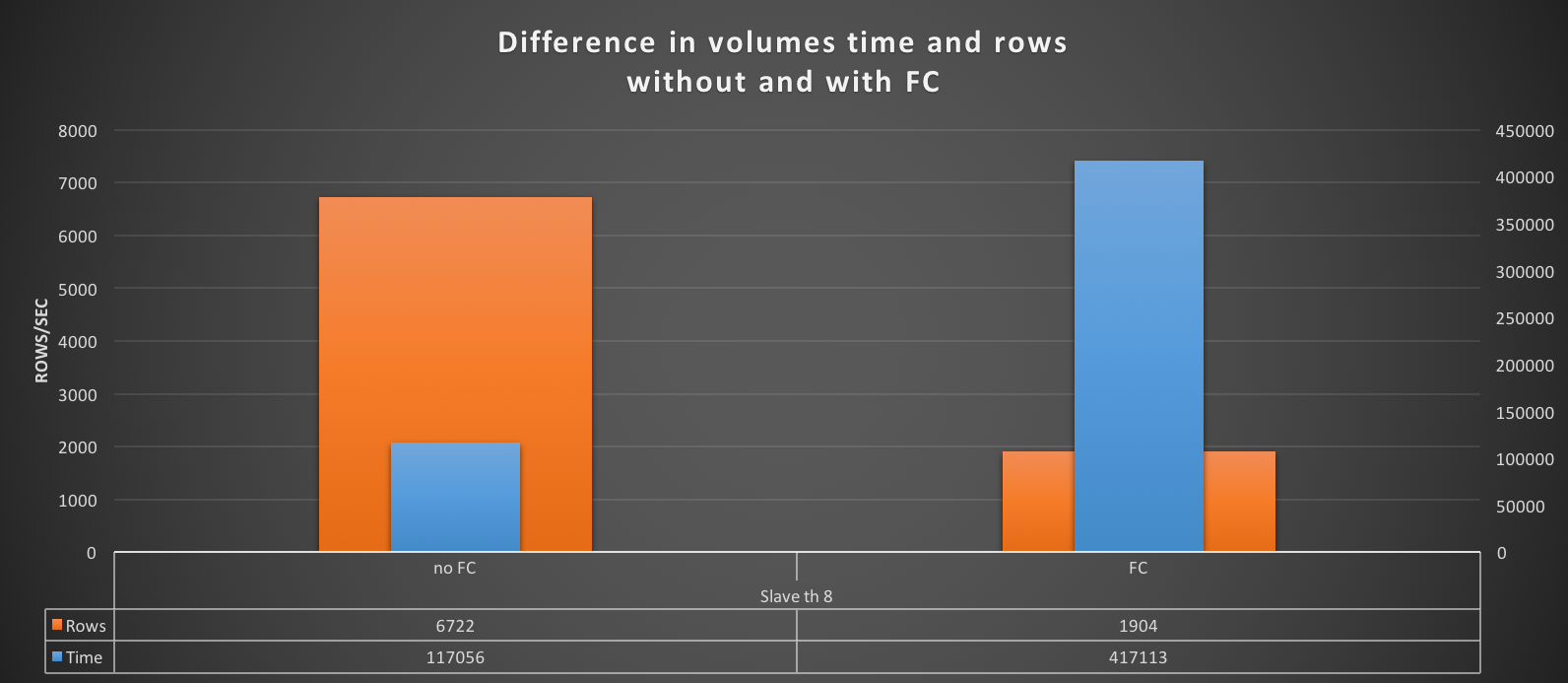

To clarify, let us see this graph:

Without FC, the Writer is able to process a high volume of rows in a limited amount of time (results from the test with 8 workers, 8 threads, 50-insert batches).

With FC the situation changes drastically: the Writer takes a long time to process a significantly smaller number of rows/sec. In short, performance drops significantly.

But hey, I can be OK with that if it means having a consistent dataset across all the nodes.

In the end, PXC and similar solutions also pay a significant price in performance to match the data-centric principle.

OK, let us see what happens on the other nodes.

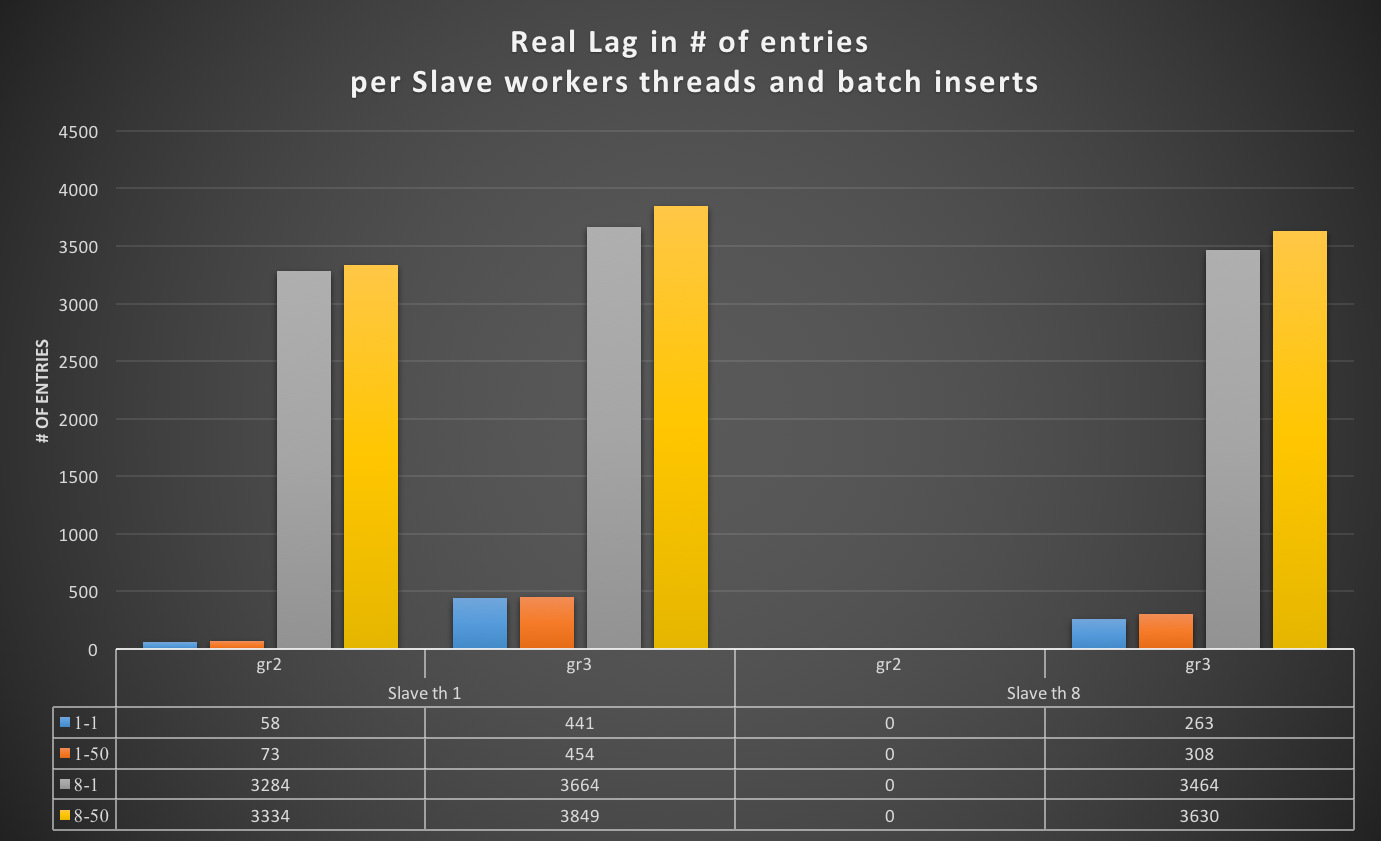

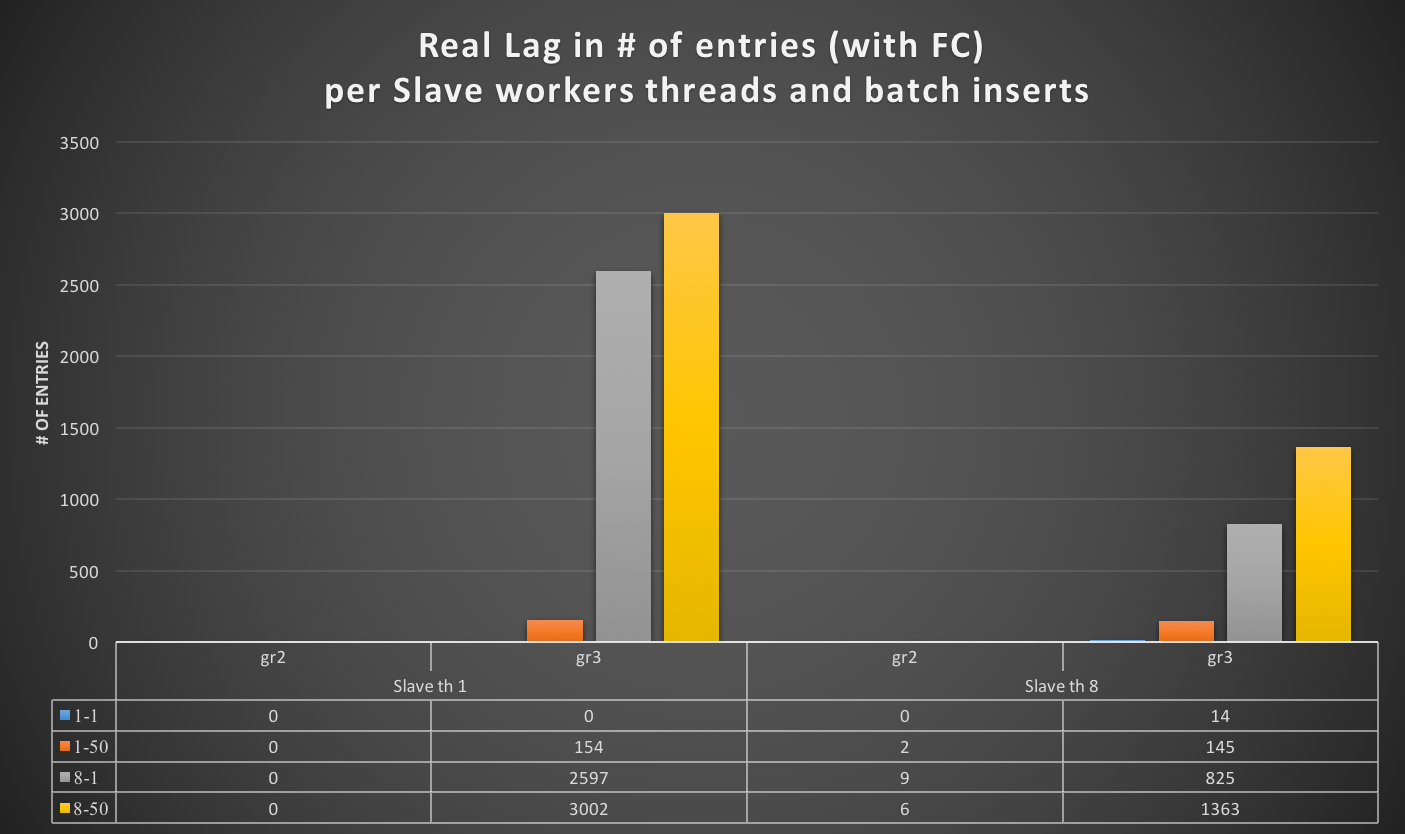

Entries Lag

Well, the scenario is not so good.

When NOT using FC, the nodes lag behind the Writer significantly. Remember that by default the FC in GR is set to 25000 entries, I mean 25K entries!!!

So what happens here is that as soon as I put some salt (see load) on the Writer, the slave nodes start to lag.

When using the default single worker, the lag is significant, while when using multiple workers the lag mainly happens only on the node(s) with the minimal (10ms) network latency.

The sad thing is that on those nodes the lag does not really go down compared to the single worker, indicating that the simple minimal latency of 10ms was enough to affect the replication.

Well, time to activate the FC and have no lag.

Unfortunately, this is not going to be the case. As we can see, the lag in the case of a single worker remains high on Gr2 as well (154 entries).

When using multiple workers, the Gr3/4 nodes are able to perform much better, and the lag is significantly lower, but still high at ~1K entries.

It is important to remember that at this point the Writer is processing 1/3 or less of the rows it is normally able to. It is also important to note that I had set the entry limit in the FC to 25, and nevertheless the Gr3 (and Gr4) nodes are lagging more than 1K entries behind.

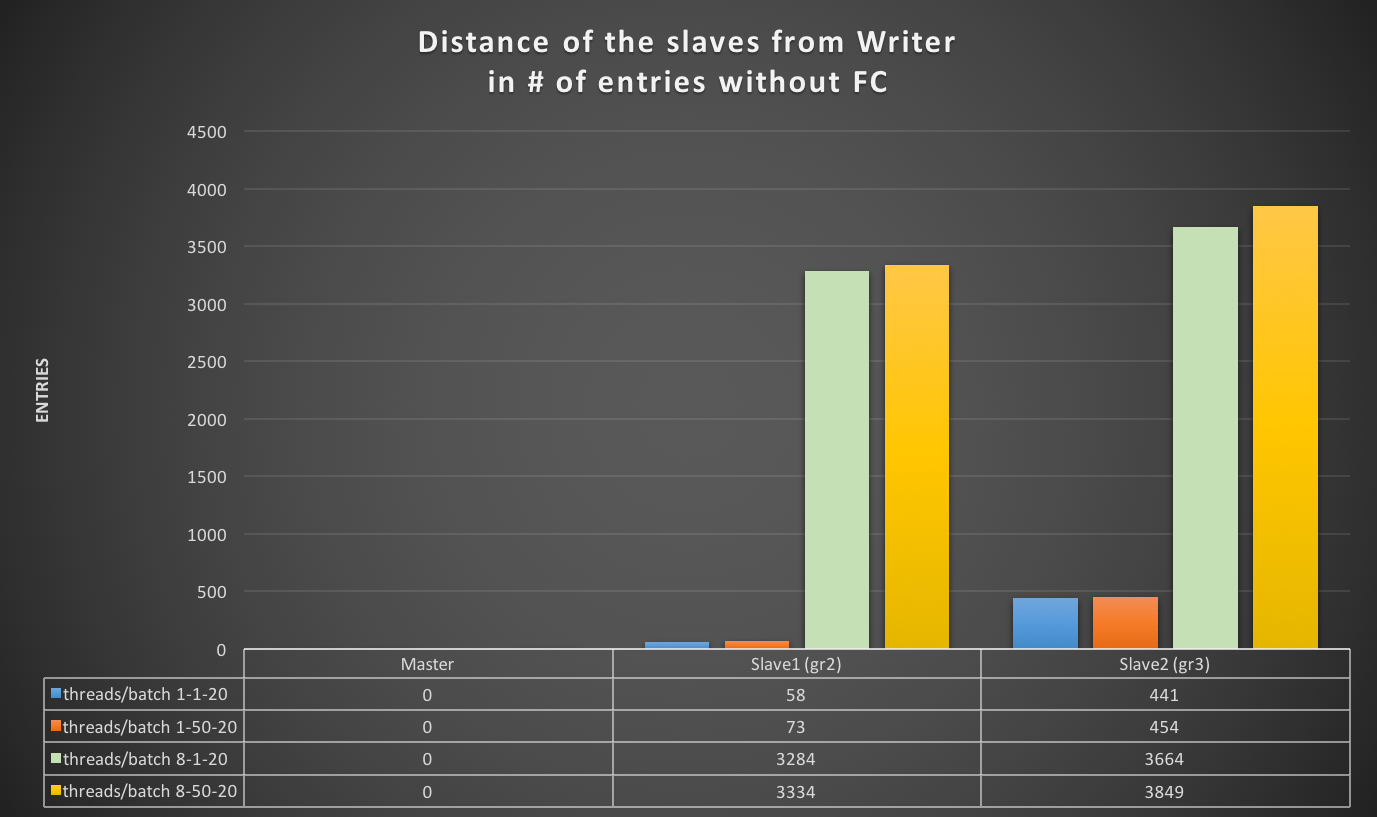

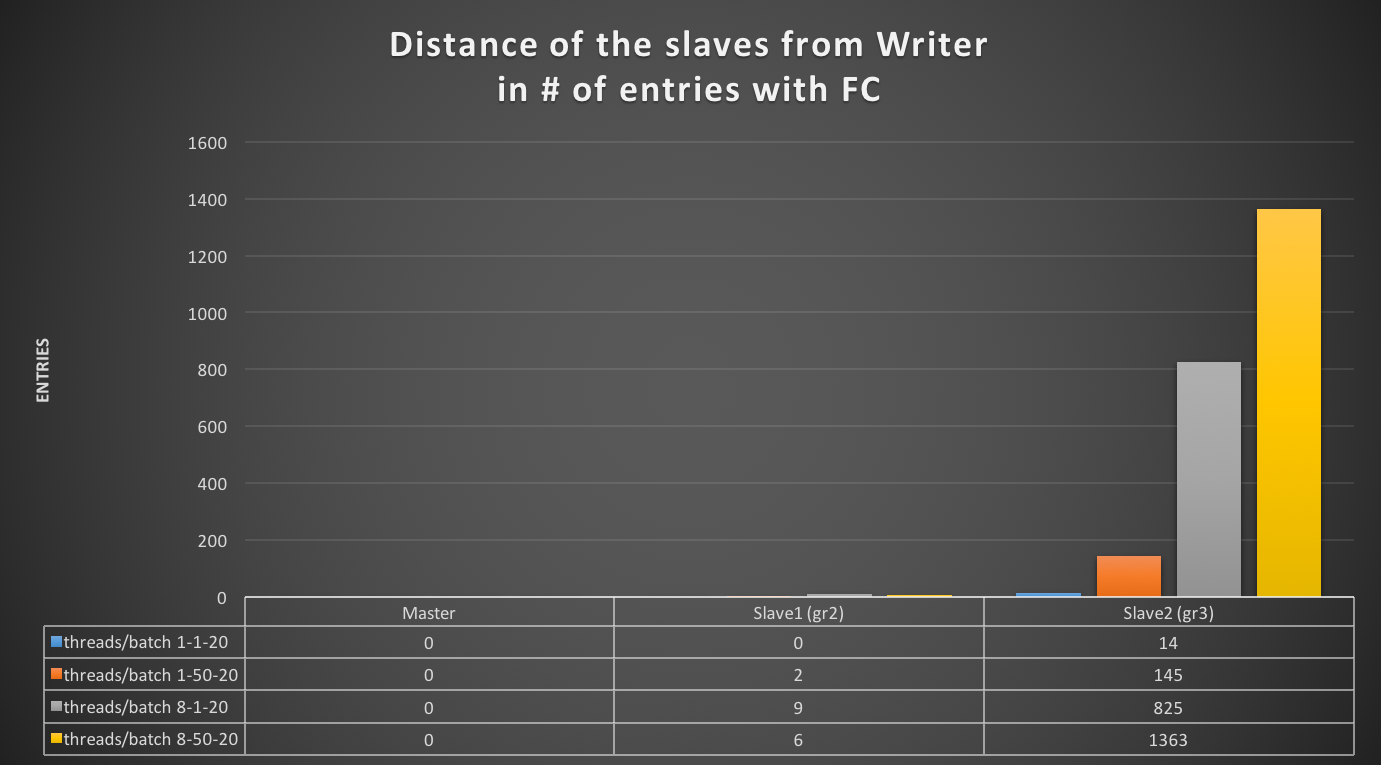

To clarify, let us check these two graphs:

Using the Writer (Master) as the baseline at entry #N, without FC the nodes (slaves) using GR start to lag behind the Writer significantly, even with a light load. The distance in this POC ranged from very minimal, with 58 entries, up to 3849 entries in the case of higher load.

Using FC, the nodes diverge less from the Writer (Master), as expected. But even though the Writer suffers a significant drop in performance (1/3 or less), the nodes lag anyhow, in the worst case by up to 1363 entries. I need to underline that we have no further way (that I am aware of) to tune the lag and prevent it from happening.

This means an application cannot transparently split writes/reads and expect consistency. The gap will be too high.
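To make the read/write split point concrete, here is a small Python sketch of the check an application (or a proxy) would have to apply before sending a read to a node. The function name and the tolerance values are hypothetical. The lag figures are taken from the text, but assigning the worst-case 1363 entries to Gr3 is purely for illustration.

```python
def readers_within_tolerance(lag_by_node, max_lag_entries):
    """Return the nodes whose lag (in entries) is acceptable for reads."""
    return sorted(
        node for node, lag in lag_by_node.items() if lag <= max_lag_entries
    )


# 154 is the Gr2 figure (single worker, FC set to 25) quoted above;
# 1363 is the worst-case figure, attached to Gr3 here only as an example.
observed_lag = {"Gr2": 154, "Gr3": 1363}

if __name__ == "__main__":
    # Tolerance equal to the FC limit used in the POC: no node qualifies.
    print(readers_within_tolerance(observed_lag, 25))
    # A much looser, application-defined tolerance.
    print(readers_within_tolerance(observed_lag, 200))
```

With a tolerance equal to the FC limit of 25 entries, neither node in this example would be eligible for reads.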

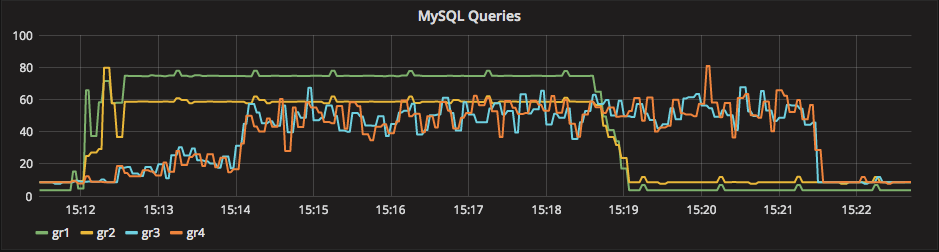

A graph that tell us a story

I was using PMM to keep an eye on the nodes while doing the tests. One of the graphs really told me that GR still has some “limits” if we want to consider it as the replication mechanism for a cluster.

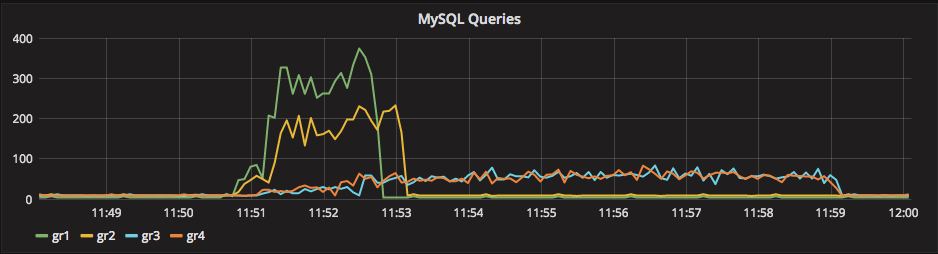

This graph shows the MySQL queries executed on all 4 nodes in the test with 8 threads, 50-insert batches, and FC. As you can see, Gr1 (the Writer) is the first one to take off, followed by Gr2. The nodes Gr3 and Gr4 require a bit more time, given the binlog transmission (and the 10ms delay). Once the data is there, they match (inconsistently) the Gr2 node; this is an effect of the FC asking the Master to slow down. But as seen previously, the nodes will never be able to match the Writer. At the end, when the load test is over, the nodes continue to process the queue for an additional ~130 sec. Considering that the whole load takes 420 sec on the Writer, this means that an extra 1/3 of the Writer's total time is spent AFTERWARDS on the slaves to sync.

The above graph shows the same test without FC. It is interesting to see how the Writer went above 300 queries/sec while Gr2 stayed around 200 and Gr3/4 far below. The Writer was able to process the whole load in ~120 seconds instead of 420, while Gr3/4 continued to process the load for an additional ~360 seconds. This means that without FC set the nodes will lag around 360 seconds behind the Master, while with FC set to 25 they will lag 130 seconds.

A significant gap.
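The arithmetic behind those figures can be sketched in a few lines of Python, using only the approximate timings quoted above (420 s plus ~130 s with FC, ~120 s plus ~360 s without). The function name is hypothetical.

```python
def catch_up_summary(writer_seconds, extra_seconds):
    """Summarise how long the slowest nodes need after the Writer is done."""
    return {
        # Time from start of load until the slowest node has applied everything.
        "total_seconds": writer_seconds + extra_seconds,
        # Catch-up time expressed as a fraction of the Writer's run time.
        "catch_up_vs_writer": extra_seconds / writer_seconds,
    }


with_fc = catch_up_summary(420, 130)     # FC set to 25
without_fc = catch_up_summary(120, 360)  # FC at its default

if __name__ == "__main__":
    print("with FC:   ", with_fc)
    print("without FC:", without_fc)
```

Taking the rounded numbers at face value, the catch-up time is about 31% of the Writer's run with FC and 3 times the Writer's run without it, while the total time until every node has applied the load comes out at roughly 550 seconds with FC against 480 seconds without.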

Conclusions

Going back to the origin, and the reason why I was looking into this POC.

I, as a customer of myself, think that my application(s) will not be a good fit for GR, given that I have set up PXC to scale out the reads and to be able to efficiently move my writer to another node when needed.

GR, while based on a very interesting concept, is still based on asynchronous replication (as my colleague Kenny said). I am sure it could make sense in many other cases, but it cannot be compared to solutions that are based on virtually synchronous replication, and it still requires a lot of refinement.

On the other hand, all the applications that can afford to have a significant gap between writer and readers will probably be fine with that. But I raise another question: was standard replication not already covering that?

Reviewing the Oracle documentation (https://dev.mysql.com/doc/refman/5.7/en/group-replication-background.html), I can see why GR, as part of InnoDB Cluster, can help me improve HA when compared to standard replication. But I also think it is important to understand that GR (and derived solutions like InnoDB Cluster) is not comparable to, or a replacement for, data-centric solutions such as PXC. At least up to now.

Good MySQL to everyone.

References

https://dev.mysql.com/doc/refman/5.7/en/group-replication.html

https://dev.mysql.com/doc/refman/5.7/en/mysql-innodb-cluster-userguide.html

http://lefred.be/content/mysql-group-replication-understanding-flow-control/